Managing AI Strategy: Moving From Capability to Business Impact

Parul Jain

kHUB post date: February 2026

Read time: 8 minutes

Executive Summary

Generative AI is reshaping how organizations approach product innovation. For senior leaders, the challenge is no longer whether to adopt AI, but how to govern it. Many organizations oscillate between two starting points: leading with new AI capabilities or leading with identified customer needs. Framed as a binary choice, this debate misses the real managerial issue.

The differentiator is intent. In this article, intent refers to a strategic commitment to a specific business outcome, expressed through the problem being solved, the role AI is expected to play, and the metrics used to judge success. Intent is what connects experimentation to results and prevents AI initiatives from drifting into either innovation theater or incremental optimization. This article also outlines how intent shapes investment decisions, success metrics, and scale-or-stop choices, and why outcomes such as adoption, trust, differentiation, and return on investment matter more than technical progress alone. With intent as a management discipline, AI moves from isolated experimentation to a sustained source of business impact.

Introduction

Generative AI is rapidly expanding what product teams can build. New models enable faster experimentation, richer interfaces, and increasingly autonomous systems. As a result, organizations are embedding AI across design workflows, development pipelines, and customer-facing experiences.

Yet outcomes remain uneven. A recent MIT Media Lab study reveals a sobering reality: despite billions in enterprise investment, 95% of generative AI pilots fail to demonstrate a measurable impact on the profit-and-loss (P&L) statement [1]. Similarly, Robert G. Cooper notes that while AI provides a powerful new lens for discovery, the core drivers of new product success—product advantage and clear value propositions—remain the ultimate arbiters of performance [2]. The difference between success and failure is rarely technical maturity. More often, it lies in how leaders frame decisions about where AI should lead, where human needs should lead, and how success is defined.

This tension is frequently described as a choice between AI-first and human-first product strategies. In practice, this framing is incomplete. Both approaches are valid starting points. What determines impact is not the starting point, but the discipline that connects it to business outcomes. When intent is explicit, AI-first exploration and human-first execution can coexist within a single portfolio. When it is absent, organizations risk confusing activity with progress.

Strategic Starting Points in AI Innovation

Product leaders typically approach AI innovation from one of two starting points.

AI-first, capability-driven innovation begins with technological possibility. Teams ask what a new model, technique, or system can now do that was not previously feasible. This logic fuels exploration and has led to entirely new product categories, from conversational interfaces to generative discovery tools. Its strength lies in expanding the frontier of what is possible.

Human-first, need-driven innovation begins with friction. Teams start by identifying where customers struggle and ask how AI might help solve those problems more effectively. This logic anchors innovation in real-world value and often accelerates adoption.

Neither starting point is inherently superior. Each carries distinct risks. AI-first initiatives can drift into feature development without a clear path to impact. Human-first initiatives can become overly incremental, optimizing existing experiences while competitors pursue more disruptive moves. The role of leadership is not to choose one over the other, but to govern both within a coherent strategy.

The AI Innovation Governance Matrix

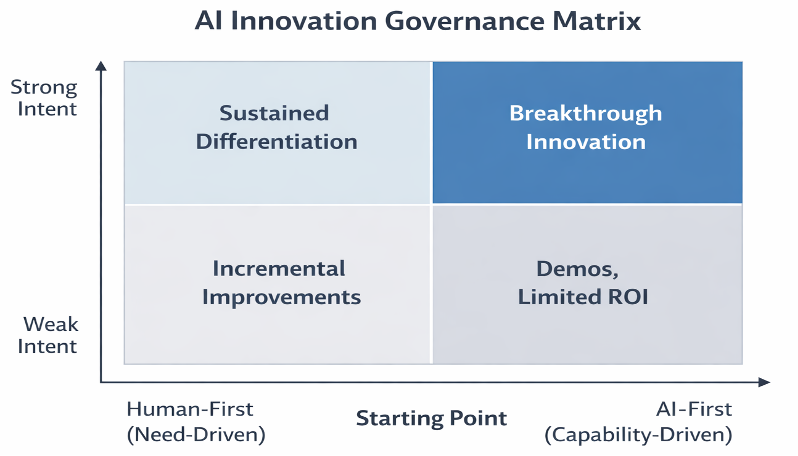

Leaders can govern AI initiatives using a simple two-by-two framework that distinguishes how ideas enter the portfolio and how rigorously outcomes are defined. One axis reflects the starting point: AI-first or human-first. The other reflects the strength of intent: weak or strong.

Figure 1. AI innovation outcomes as a function of starting point and strategic intent

Figure 1. AI innovation outcomes as a function of starting point and strategic intent

This produces four patterns:

- AI-first with weak intent results in technical demonstrations, internal excitement, and limited business impact.

- AI-first with strong intent enables Breakthrough Innovation tied to explicit market or operational outcomes.

- Human-first with weak intent produces safe Incremental Improvements that fail to differentiate.

- Human-first with strong intent drives Sustained Differentiation through adoption, trust, and measurable value.

Each quadrant implies a different funding level, review cadence, and set of scale-or-stop criteria. The matrix is a governance tool: every AI initiative should be placed in one quadrant and managed accordingly.
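For teams that track their portfolio in a spreadsheet or script, the matrix can be expressed as a simple lookup. The sketch below is illustrative only: the `Initiative` record, its field names, and the rule that intent counts as strong only when the problem, the AI role, and the success metrics are all stated are assumptions drawn from this article's definition of intent, not a prescribed scoring method.

```python
from dataclasses import dataclass
from enum import Enum


class StartingPoint(Enum):
    AI_FIRST = "AI-first"
    HUMAN_FIRST = "human-first"


class Intent(Enum):
    WEAK = "weak"
    STRONG = "strong"


# Labels paraphrase the four patterns of the governance matrix.
QUADRANTS = {
    (StartingPoint.AI_FIRST, Intent.WEAK): "technical demonstration",
    (StartingPoint.AI_FIRST, Intent.STRONG): "breakthrough innovation",
    (StartingPoint.HUMAN_FIRST, Intent.WEAK): "incremental improvement",
    (StartingPoint.HUMAN_FIRST, Intent.STRONG): "sustained differentiation",
}


@dataclass(frozen=True)
class Initiative:
    """Hypothetical portfolio record; field names are illustrative."""
    name: str
    starting_point: StartingPoint
    problem: str = ""                      # the problem being solved
    ai_role: str = ""                      # the role AI is expected to play
    success_metrics: tuple[str, ...] = ()  # metrics used to judge success


def assess_intent(initiative: Initiative) -> Intent:
    # Assumed rule: intent is strong only if all three elements are stated.
    stated = (
        initiative.problem.strip()
        and initiative.ai_role.strip()
        and initiative.success_metrics
    )
    return Intent.STRONG if stated else Intent.WEAK


def quadrant(initiative: Initiative) -> str:
    """Place an initiative in exactly one quadrant of the matrix."""
    return QUADRANTS[(initiative.starting_point, assess_intent(initiative))]
```

In practice, judging the strength of intent calls for leadership review rather than a check for non-empty fields; the value of the lookup is that it forces every initiative into exactly one quadrant.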

Managing the Portfolio: Exploration and Execution

Resource allocation is the central leadership decision in AI innovation. Overinvesting in exploration wastes capital. Overcommitting to execution restricts adaptability. High-performing organizations manage AI as a portfolio of investments with different risk and time horizons.

Exploration investments typically align with AI-first initiatives backed by strong intent. These efforts should be small, time-bound, and hypothesis-driven. Funding should be staged, with clear learning goals and explicit criteria for continuation or termination.

Execution investments typically align with human-first initiatives backed by strong intent. These efforts warrant larger investment and are held accountable for adoption, customer impact, and financial results. Intent ensures that both modes remain aligned with strategy rather than drifting into novelty or inertia.

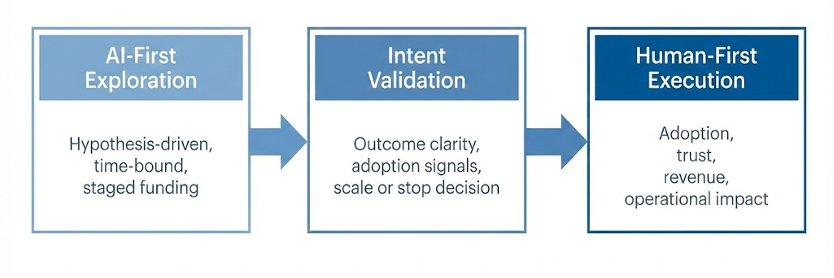

Figure 2. Intent-governed transition from exploration to execution

AI-first exploration produces signals of feasibility and value. A structured intent review then governs whether those signals warrant scaling into human-centered execution or justify termination. Figure 2 depicts this transition, emphasizing that portfolio discipline requires both selective scaling and intentional stopping.

Case Study: AI-First Exploration with Strong Intent

Klarna offers a prime example of moving from an AI-first capability to a strong-intent outcome. In early 2024, the company launched an AI assistant to handle customer service, a classic AI-first exploration. However, the governance was built not on the novelty of chat but on a clear intent: managing massive global volume without sacrificing human-level quality.

Within the first month, the AI assistant handled approximately 2.3 million customer service chats, roughly two-thirds of all inquiries [3]. Crucially, the governance focused on human-first outcomes: customer satisfaction scores remained on par with those of human agents, while resolution times dropped from 11 minutes to less than 2 minutes. The strategic intent was realized in an estimated $40 million annual profit increase [3]. The initiative scaled because it met intent-level metrics (adoption, trust, and ROI), not just because the model functioned technically.

Case Study: AI-First Exploration with Weak Intent

Conversely, the failure of Zillow Offers serves as a cautionary tale. While Zillow’s Zestimate remains a successful informational tool, the company’s attempt to use that same algorithm as the primary engine for its house-flipping business failed because it lacked outcome-based governance. The company mistook a high-performing informational capability for a risk-proof execution strategy. When the market shifted, its governance did not trigger a pivot or a stop until the company had incurred nearly $881 million in losses [4]. This collapse underscores that technical brilliance cannot compensate for a failure to tie AI experimentation to rigorous, outcome-based governance.

Common Failure Modes When Intent is Weak

Three patterns signal misalignment.

- AI-first without intent. Technical uncertainty is mistaken for strategic optionality. Teams continue investing because learning is happening, even when no credible path to customer value exists.

- Human-first without intent. Roadmaps fill with incremental improvements that protect existing offerings while competitors establish new positions.

- Intent without discipline. Leaders articulate strategic ambitions but fail to tie them to metrics, incentives, or decision gates. Activity increases, but outcomes do not.

Governance That Reinforces Intent

Intent must be reinforced through operating mechanisms. Stage gates should test evidence of user value, learning velocity, and path to scale. At each periodic review, every AI initiative should earn one of three outcomes: scale, pivot, or stop. To support this discipline, leaders can use the following checklist during reviews:

The Intent Checklist

- The Friction: Can you describe the problem this solves without using the words "AI" or "LLM"?

- The Baseline: Do we have a quantitative measure of how the user solves this problem today?

- The Kill-Switch: If the AI is 99% accurate but the business metric doesn't move, are we prepared to stop?

- The Transparency: Does the user have a clear "manual override" or understanding of the AI's logic?

- The Data Moat: Does using this feature create a feedback loop that makes the product harder to copy?
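The stage-gate logic described in this section can be sketched as a small decision function. This is a minimal illustration under stated assumptions: the `GateEvidence` fields mirror the three tests named above (user value, learning velocity, path to scale), while the mapping from evidence to outcome and the `max_pivots` cap are hypothetical choices, included so that ongoing learning alone cannot justify indefinite investment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateEvidence:
    """Hypothetical review record for one initiative at one stage gate."""
    user_value: bool         # the business metric moved against its baseline
    learning_velocity: bool  # recent learning is still changing decisions
    path_to_scale: bool      # a credible route to broad rollout exists
    pivots_used: int = 0     # pivots already granted to this initiative


def gate_decision(evidence: GateEvidence, max_pivots: int = 2) -> str:
    """Return 'scale', 'pivot', or 'stop' for a single review."""
    # Assumed rule: scaling requires proven value and a route to scale.
    if evidence.user_value and evidence.path_to_scale:
        return "scale"
    # Assumed rule: learning buys a limited number of pivots, not open-ended funding.
    if evidence.learning_velocity and evidence.pivots_used < max_pivots:
        return "pivot"
    # Otherwise the kill-switch applies, however well the model performs.
    return "stop"
```

For example, an initiative with strong learning but no movement in the business metric would receive "pivot" on its first reviews and "stop" once its pivots are exhausted.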

Guiding Questions for Strategy and Portfolio Reviews

Intent must be reinforced continuously. Leaders can keep intent at the center by asking these questions consistently:

- Balance. What share of the roadmap is dedicated to AI-first exploration versus human-first execution?

- Alignment. For each major AI initiative, what specific customer friction is it intended to address?

- Measurement. Beyond efficiency, are we tracking adoption, trust, and differentiation?

- Learning. What have we learned from AI-first exploration that has changed our resource allocation?

- Scale. Which initiatives have earned the right to scale based on evidence rather than momentum?

Conclusion

AI will continue to expand what is possible. Possibility without intent creates noise. The most effective product leaders will not choose between AI-first and human-first strategies. They will integrate both under a discipline that connects capability to business impact. Intent is not a belief; it is a management practice visible in portfolio choices and the willingness to stop work that no longer serves the strategy.

References

[1] MIT Media Lab, "The GenAI divide: State of AI in business 2025," Cambridge, MA, Jan. 2025.

[2] R. G. Cooper, "AI-PRISM: A new lens for predicting new product success," PDMA Knowledge Hub 2.0, Jan. 2025. doi: 10.1111/pdma.12345

[3] Klarna, "Klarna AI assistant handles two-thirds of customer service chats in its first month," Stockholm, Sweden, Feb. 27, 2024. [Online]. Available: https://www.klarna.com/international/press/

[4] S. Woolley, "The Zillow algorithm failure: A lesson in AI-first risk," Harvard Business Review, vol. 99, no. 4, 2022. doi: 10.1234/hbr.2022.09.01

[5] Gartner, "Gartner predicts over 40% of agentic AI projects will be canceled by end of 2027," Stamford, CT, 2025. doi: 10.1016/j.gartner.2025.06.001

ABOUT THE AUTHOR

Parul Jain is a Product Strategy Leader with over a decade of experience driving AI-led innovation and digital transformation across telecommunications, mobility, fintech, retail, and e-commerce. She currently serves as the Vice President of Programming for the PDMA SF Bay Area Chapter, supporting thought leadership and continuous learning within the product community.

Parul actively contributes to the advancement of product management and innovation through mentoring emerging product leaders, advising startups, authoring thought-leadership content on AI and product strategy, and participating as a judge for product and innovation awards. She holds a Master of Science in Product Management from Carnegie Mellon University and a Bachelor’s in Electronics and Communication Engineering.