A Strategy for Adopting AI in NPD

Dr. Robert G. Cooper

kHUB post date: September 2025

Read time: 10 minutes

The AI Paradox

AI is often hailed as a game-changer for new product development (NPD), promising dramatic efficiency gains, faster time-to-market, and superior product outcomes [1]. Yet behind the headlines, adoption tells a different story. While early adopters—mostly large firms with substantial budgets and large IT departments—report transformative results, most organizations are still moving slowly.

![Figure 1. Current and expected use of AI for various tasks or applications in NPD [2,4]](https://higherlogicdownload.s3.amazonaws.com/PDMA/1f7c783a-7a67-458e-8dc0-47c8434a66fd/UploadedImages/Strategy/ai-cooper-sept25-1.png)

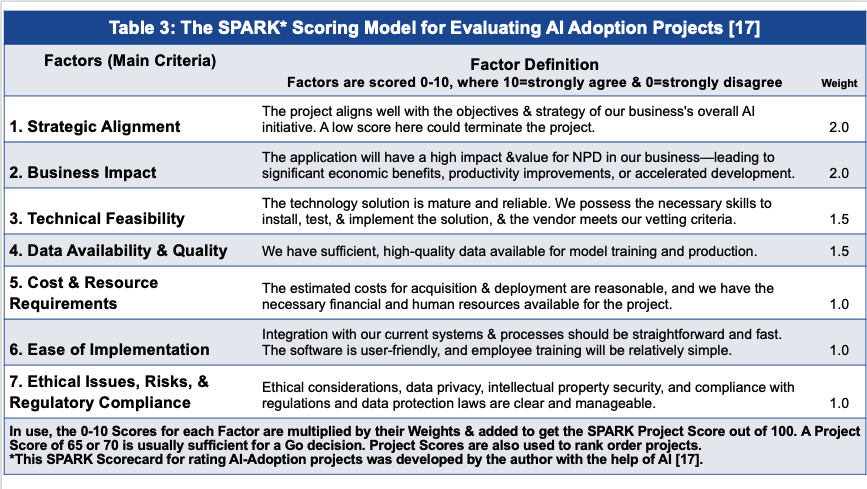

Figure 1. Current and expected use of AI for various tasks or applications in NPD [2,4]

Before going further, it’s important to clarify what we mean by “AI adoption.” This article is not about the tech firms that create and sell AI software and capture stock market attention. Instead, the focus is on non-AI companies (manufacturers, banks, consumer goods companies, chemical firms) that acquire AI tools, typically by purchasing or licensing software, and apply them in their business. That might mean improving marketing, optimizing supply chains, or, the focus here, using AI in NPD, for example to generate ideas or support product design.

Despite the promise, AI adoption in NPD has been disappointingly slow. Recent research shows that only 23% of firms used AI for any NPD task in early 2024 [2], rising only slightly to 28% by 2025 [3]. Across the full spectrum of NPD activities—from idea generation through launch—AI adoption averages just 5% per task in the U.S. and EU (Figure 1) [2].

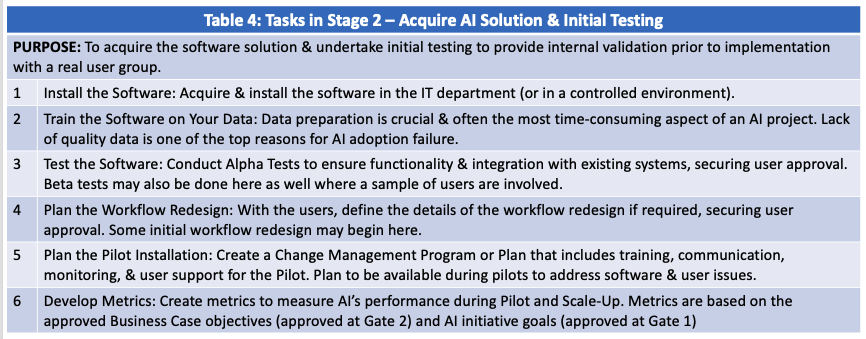

Why so sluggish? The same study highlights the major drivers of adoption (purple bars in Figure 2). The strongest predictor is clear: when firms achieve visible positive results from their first AI applications, adoption accelerates. In fact, this single factor is so strongly correlated that it accounts for 50% of “extensive usage of AI in NPD.”

![Figure 2. The drivers of AI adoption in NPD, their importance, and how businesses fare on each [4]](https://higherlogicdownload.s3.amazonaws.com/PDMA/1f7c783a-7a67-458e-8dc0-47c8434a66fd/UploadedImages/Strategy/ai-cooper-sept25-2.png)

Figure 2. The drivers of AI adoption in NPD, their importance, and how businesses fare on each [4]

Other drivers include strong top-management commitment, the presence of an executive sponsor, and trust in AI’s capabilities. Unfortunately, firms generally score low on these same drivers—for example, only 5% report seeing positive results from AI in NPD to date (turquoise bars in Figure 2).

The Failure Rate Crisis

The fundamental obstacle blocking AI adoption is its alarmingly high failure rate:

- McKinsey research shows 80% of enterprises using generative AI report no meaningful bottom-line impact [3].

- RAND Corporation reports over 80% of AI adoption projects fail—double the failure rate of traditional IT projects [4].

- A Harvard Business Review article estimates AI adoption project failure rates reach 80%, with only 14% of companies ready for AI adoption [5].

The "pilot paralysis" phenomenon is epidemic in AI adoption projects [6]. Pilot paralysis occurs when companies acquire AI software, launch pilots, achieve mediocre results, then abandon the project. Research indicates only 13–20% of AI adoption projects successfully transition from prototype to production [7]. The pattern is accelerating: 42% of firms abandoned most AI adoption initiatives in 2025, up dramatically from 17% in 2024, according to S&P Global Market Intelligence [8].

Why AI Adoption Projects Fail

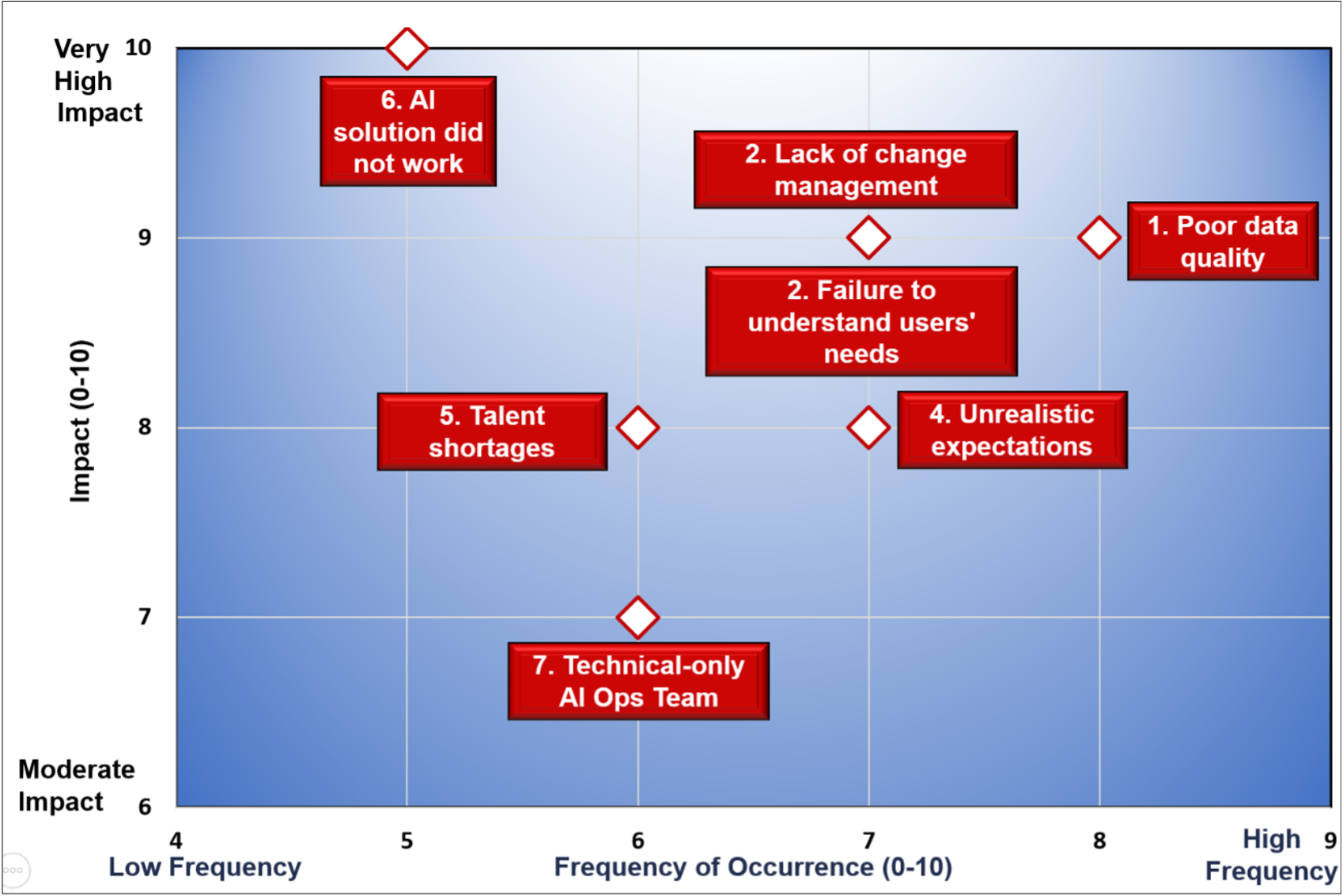

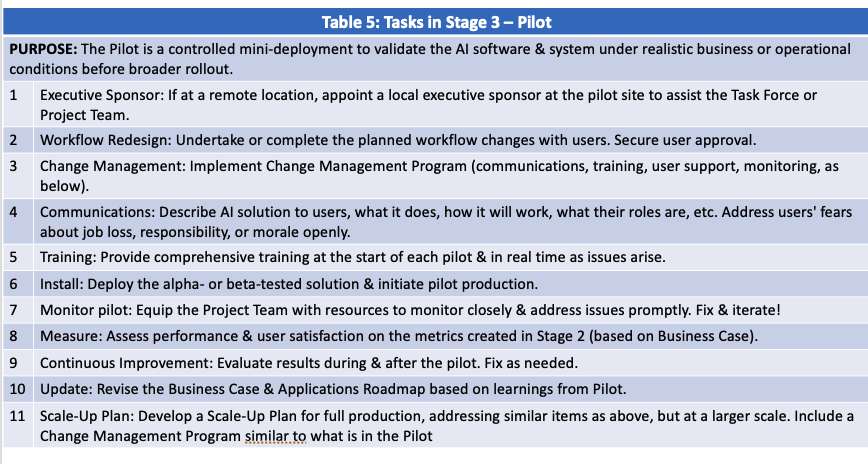

While AI-adoption failure hasn't been rigorously studied, emerging research combined with expert opinion reveals predictable fail-points (Figure 3) [9]. Many mirror classic NPD failure patterns: a failure to understand user needs, absent cross-functional teams, and a product that did not work. Each failure cause has identifiable remedial actions, provided firms recognize the potential pitfalls in advance.

The real fail-point isn’t the AI solution itself, but rather how firms deploy AI: “adoption and workflow redesign challenges,” according to the McKinsey report [3]. Most firms “aren’t yet implementing the adoption and scaling practices that we know help create value,” and “only 1 percent of company executives describe their generative AI rollouts as ‘mature’.”

This pattern echoes NPD's evolution. Early product development suffered similarly high failure rates, sometimes reaching 90%. The 1960s and 1970s studies on "why new products fail" revealed remarkably similar patterns to those in Figure 3.

Figure 3. The 7 failure reasons for AI adoption—showing both their impact and frequency of occurrence

The solution involved identifying and implementing proven best practices: for example, empowered cross-functional teams, Voice of Customer research, and iterative build-test-learn cycles. These practices crystallized into structured processes like the Stage-Gate® methodology [10].

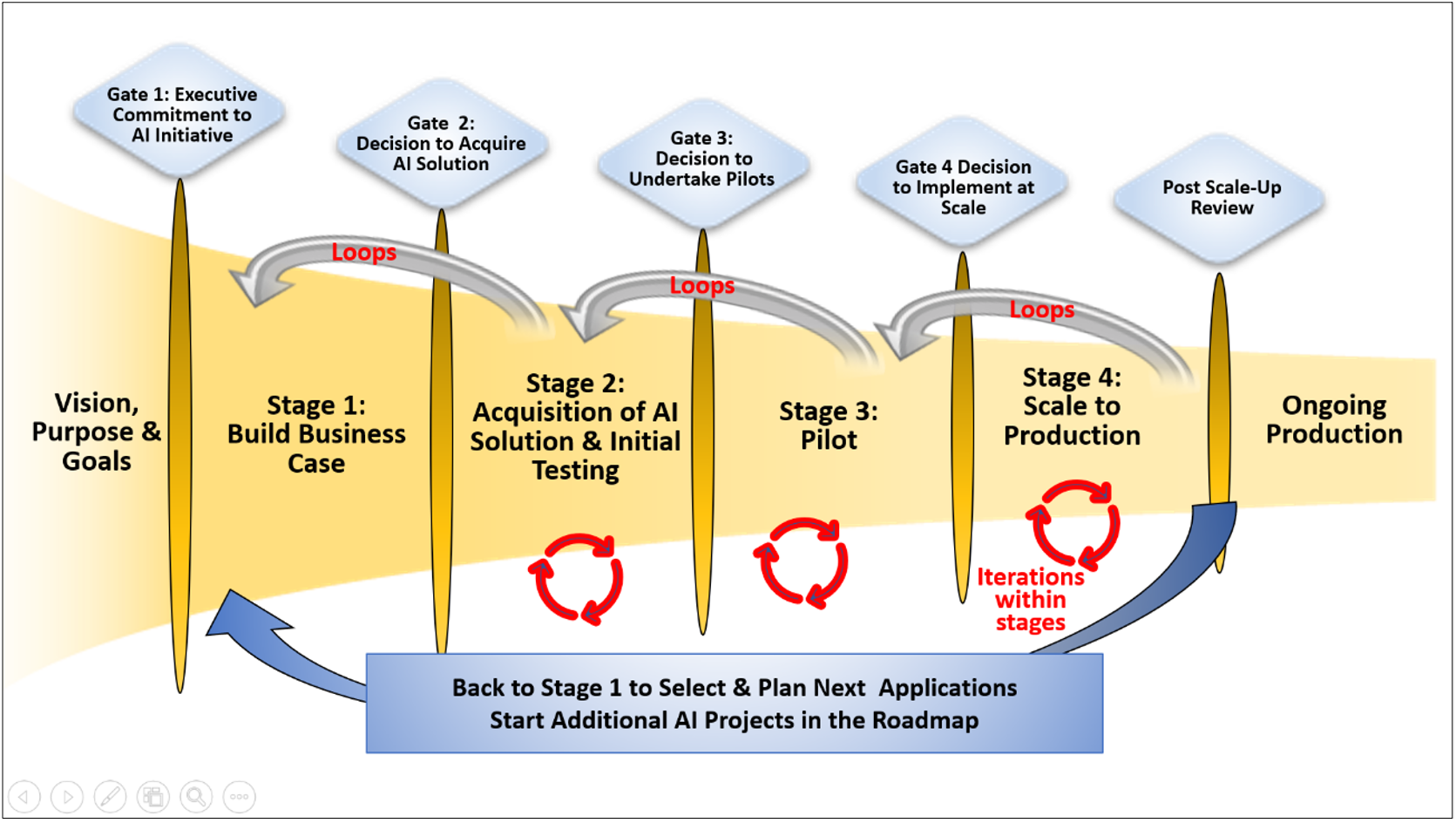

The RAPID Process for AI Adoption Projects

Figure 4. The RAPID Adoption & Deployment Process for AI: Roadmap for AI Procurement, Implementation, and Deployment

The RAPID Process is a four-stage, four-gate AI adoption model, shown in Figure 4 [11]. Its stages incorporate best practices designed to mitigate the common failure reasons, while its gates are designed to stop bad AI projects and redirect resources to higher-value applications. While the focus of this article is on AI for NPD, the RAPID process is fairly universal and, with minor changes, can be used to adopt and deploy AI across the entire business, not just for NPD.

A recent IBM report argues that a “stage gating” model can facilitate AI rollout, and outlines their suggested seven-stage gated process for AI deployment [12]. Some companies are indeed using a more structured and gated approach to rolling out AI in their firms, namely their Stage-Gate® NPD process, but in modified form. This is not a new concept: leading firms—Exxon, 3M, Kellogg’s—had for years adapted their traditional new product gating process to handle new technology adoption and deployment [13].

RAPID is based on three bodies of knowledge:

- The reasons for AI adoption failure, and the needed remedial actions to deal with these failure reasons in Figure 3 [9].

- Best practices found for AI rollout in studies done by McKinsey and others [3, 5].

- Principles in the Stage-Gate® process used by firms for deploying new technologies [14, 15].

Individual companies may wish to simplify the model depending on the magnitude of their AI adoption projects.

The RAPID Process Stage-by-Stage

Here is a detailed look at the RAPID model (you can follow in Figure 4):

Pre-Stage: Establish Mission, Vision, and Goals: The first step is for senior management to make a commitment to implement AI and to designate an executive sponsor. Both are strongly correlated with extensive AI adoption and thus are critical, as seen in Figure 2. A vision, goals, and objectives for the AI initiative—whether it be for one department such as NPD, or across the entire corporation—are agreed upon.

Resources must be put in place. A cross-functional team or AI Task Force should be assembled, reporting directly to the leadership team, with representatives from the user departments as well as from the IT Group. Add external expertise, for example, an experienced outside consultant.

This “top-down” approach with the leadership team heavily involved is crucial: AI adoption is not a “ground-up initiative” with individuals in the company adopting AI on a piecemeal basis. This initiative must be driven by the leadership team [3].

Gate 1 in Figure 4 follows and is simply a confirmation of the leadership team’s commitment to the AI initiative with the AI Task Force, and agreement regarding vision and goals.

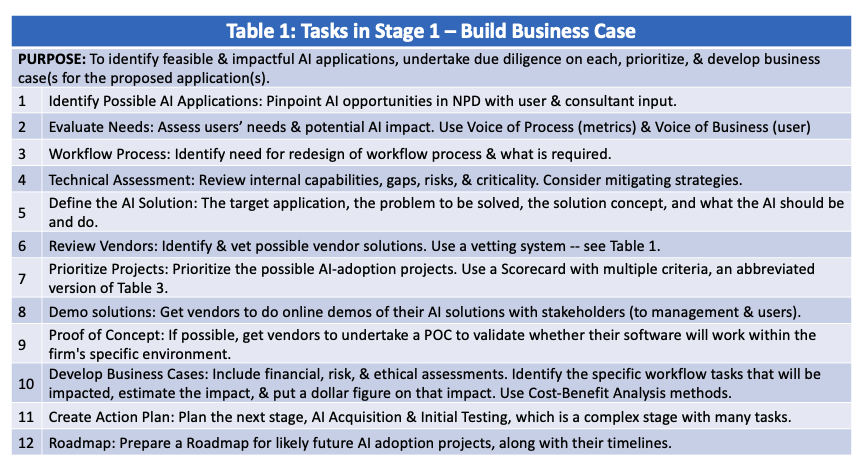

Stage 1: Build Business Case. Weak business cases—poorly researched and constructed—frequently doom AI projects by allowing poor projects with minimal impact to proceed. Strong, fact-based business cases prove essential for identifying high-value projects; critical Stage 1 tasks are listed in Table 1.

Vital tasks include conducting Voice of Process (VoP) and Voice of Business user (VoB) studies to understand the problem to be resolved and the users’ needs for AI; deficiencies here are a major cause of AI failure. With this insight, define the AI solution concept: what the AI should be and do. This VoP and VoB research may also point to needed redesign of workflow processes.

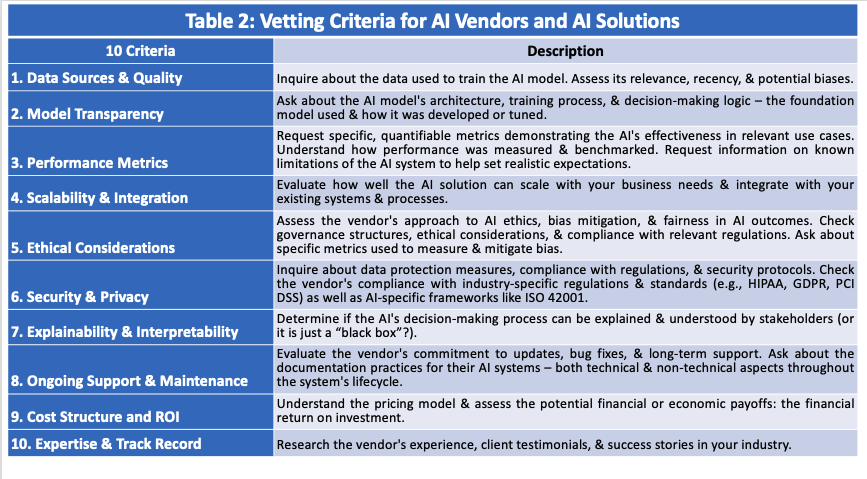

Equally important is testing and validating possible software solutions early in the process. Identify and vet possible AI vendors, using a set of vendor vetting criteria shown in Table 2. Undertake online AI demos and, if possible, proof-of-concept (POC) trials—a trial of the vendor’s software to validate whether it will work in the firm’s environment. Firms use POC trials to evaluate functionality, integration capabilities, user adoption potential, and hidden implementation costs.

Stage 1 outputs include a prioritized short list of proposed AI-adoption projects with respective business cases and action plans. A second output is a strategic roadmap encompassing future AI initiatives—ensuring a strategic rather than merely a tactical approach.

Gate 2: Decision to Acquire the AI Solution. At this point, a critical management decision is made, namely Gate 2, the decision to purchase, license, or internally develop some or all of the proposed AI software solutions. If internal development is the route, then the project shifts to the firm’s internal IT methodology; the majority of AI adoption projects involve acquiring software from an outside vendor, however.

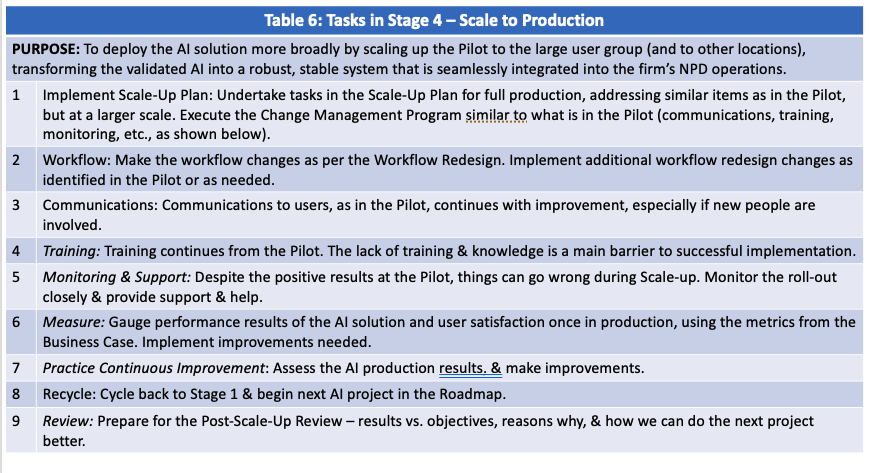

Gate 2 is a difficult investment decision because the information in the business case is often uncertain and fluid. History has shown that managers in NPD facing similar decisions with uncertain information get fewer than 50% of the decisions correct [16]; thus, a more structured decision approach should be used here, such as the SPARK Scorecard in Table 3 [17]. Each member of the leadership team individually scores the project on the seven criteria shown in Table 3; the team then discusses the scores and differences of opinion, and makes a Go/No-Go decision.
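The scoring step can be illustrated with a short Python sketch. It is only an illustration under stated assumptions, not the SPARK Scorecard itself: the criterion labels are placeholders for the seven criteria in Table 3, and the 0-10 scale, the Go threshold, and the rule for flagging disagreement are all hypothetical. The output is a starting point for the team's discussion, not a substitute for it.

```python
from statistics import mean

# Hypothetical placeholder labels: the real seven criteria are those in Table 3.
CRITERIA = [f"criterion_{i}" for i in range(1, 8)]


def evaluate_gate(scores_by_rater, go_threshold=6.0, spread_threshold=3.0):
    """Summarize individual scorecards for a Gate 2 discussion.

    scores_by_rater maps each leader's name to a dict of criterion -> score
    (0-10 scale, an assumption). Returns the overall mean score, the criteria
    whose scores differ enough to warrant discussion, and a provisional
    recommendation. The final Go/No-Go call still belongs to the team.
    """
    per_criterion = {}
    for criterion in CRITERIA:
        values = [scores[criterion] for scores in scores_by_rater.values()]
        per_criterion[criterion] = {
            "mean": mean(values),
            "spread": max(values) - min(values),
        }
    overall = mean(item["mean"] for item in per_criterion.values())
    to_discuss = [
        c for c, item in per_criterion.items()
        if item["spread"] >= spread_threshold
    ]
    recommendation = "Go" if overall >= go_threshold else "No-Go"
    return overall, to_discuss, recommendation


# Example with two hypothetical raters.
rater_a = {c: 8 for c in CRITERIA}
rater_b = {c: 6 for c in CRITERIA}
rater_b["criterion_1"] = 2  # a sharp difference of opinion on one criterion

overall, to_discuss, recommendation = evaluate_gate({"A": rater_a, "B": rater_b})
print(f"Overall score: {overall:.2f}")
print(f"Discuss first: {to_discuss}")
print(f"Provisional recommendation: {recommendation}")
```

Flagging the criteria with the widest spread of scores puts the differences of opinion on the table before the average is allowed to settle the matter.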

Stage 2: Acquisition of the AI Solution and Alpha Testing. The AI solution is acquired and the IT department conducts alpha testing internally in a controlled environment. This task involves internal testers, developers, and IT staff testing core functionality and stability before external exposure. A beta test, which exposes the software to a select group of actual users, may also be done here.

This sequential testing approach—POC, alpha, beta, and pilot—allows the business to identify technical issues early when they’re less expensive to resolve. Depending on the size and number of AI projects, the Task Force may divide into specific project teams, still cross-functional.

Details of Stage 2 are in Table 4. The outputs of this stage are the results of the alpha test and the action plans for the pilot tests in the next stage. Additionally, put metrics in place to gauge the performance of the AI.

Stage 3: Pilots. This is the critical test phase with real users in the company, where so many projects become stalled. Pilot implementation is structured as a controlled mini-deployment: the goal is to validate the software under realistic business or operational conditions before broader rollout. Pilots allow the business to gather user feedback, identify improvement opportunities, and refine implementation strategies before full deployment.

A number of tasks are essential to ensure success, as noted in Table 5, including training, support, and redesigning of workflow processes. Multiple iterations occur here as the results of the pilot may not initially be positive, and adjustments or even a full pivot (loop back to the previous stage) may be needed. A complete rollout plan is also developed, as this too is a deficient area: the change management effort is often underestimated.

Stage 4: Scale to Production. This vital stage involves implementing the scale-up plan, including change management, communications, and training. Typical tasks are in Table 6. The AI’s performance is monitored versus the metrics established in Stage 2, and the necessary fixes are undertaken. Once the AI solution is working well, the task force or project team cycles back to Stage 1 and starts moving the next project in the roadmap forward.

Ongoing Production. While technically not a stage in the RAPID Process, the project team is responsible for monitoring the project once in full production, ensuring that targets are hit, and initiating continuous improvements.

Start the Journey Now... But Use a Map

The journey to adopt and deploy AI is indeed a long one and fraught with difficulties. Most businesses that set out are currently unsuccessful. But ultimately, they must successfully reach the AI adoption destination. AI is here, and as with new technologies in previous industrial revolutions, it is changing the face of industry and society.

Beginning any journey without an understanding of the pitfalls along the way, and without a map to guide you, is not wise. The RAPID Process is such a map: It’s a proven methodology for implementing new technologies, such as AI, not only in NPD but across the business. Employing RAPID for AI adoption and deployment projects will not guarantee success, but it certainly will heighten the odds of reaching the desired destination.

References

[1] Robert G. Cooper, “The Artificial Intelligence Revolution in New-Product Development,” IEEE Engineering Management Review 52(1), (2024): 195–211. DOI: 10.1109/EMR.2023.3336834. Link is article #1 at: https://bobcooper.ca/articles/artificial-intelligence-in-npd

[2] Robert G. Cooper and Alexander M. Brem, “The Adoption of AI in New Product Development: Results of a Multi-firm Study in the US and Europe,” Research-Technology Management 67(3), (2024): 33–54. DOI: 10.1080/08956308.2024.2324241. Link is article #8 at: https://bobcooper.ca/articles/artificial-intelligence-in-npd

[3] McKinsey & Co., “The State of AI: How Organizations Are Rewiring to Capture Value,” QuantumBlack AI, (March 12, 2025). https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai#/

[4] James Ryseff, Brandon F. De Bruhl, Sydne J. Newberry, “The Root Causes of Failure for Artificial Intelligence Projects and How They Can Succeed.” The RAND Corporation Report, (Aug. 13, 2024). Link: The Root Causes of Failure for Artificial Intelligence Projects and How They Can Succeed: Avoiding the Anti-Patterns of AI | RAND

[5] Iavor Bojinov, “Keep Your AI Projects on Track,” Harvard Business Review Magazine (Nov.-Dec., 2023). Link: https://hbr.org/2023/11/keep-your-ai-projects-on-track

[6] R. Gregory, “Overcoming Pilot Paralysis in Digital Transformation,” Weatherhead School of Management, Case Western Reserve University (April 27, 2021). Link: Overcoming Pilot Paralysis in Digital Transformation | CWRU Newsroom | Case Western Reserve University

[7] J. Masci, “AI Has a Poor Track Record, Unless You Clearly Understand What You’re Going For,” Industry Week (Jan. 19, 2022). Link: AI Has a Poor Track Record, Unless You Clearly Understand What You’re Going for | IndustryWeek

[8] S&P, “Generative AI Experiences Rapid Adoption, But With Mixed Outcomes – Highlights from VotE: AI & Machine Learning,” S&P Global (May 30, 2025). Link: https://www.spglobal.com/market-intelligence/en/news-insights/research/ai-experiences-rapid-adoption-but-with-mixed-outcomes-highlights-from-vote-ai-machine-learning

[9] Robert G. Cooper, “Why AI Projects Fail: Lessons From New Product Development,” IEEE Engineering Management Review (July, 2024). DOI 10.1109/EMR.2024.3419268 Link is article #10 at: https://bobcooper.ca/articles/artificial-intelligence-in-npd

[10] Robert G. Cooper, “The 5-th Generation Stage-Gate Idea-to-Launch Process,” IEEE Engineering Management Review, (50),4 (Dec. 2022): 43–55. DOI: 10.1109/EMR.2022.3222937. Link is article #3 at: https://bobcooper.ca/articles/next-generation-stage-gate-and-whats-next-after-stage-gate

[11] Robert G. Cooper, “Adopting AI for NPD: A Strategic Roadmap for Managers,” Research-Technology Management (68):3, (April 2025): 41-46. DOI: 10.1080/08956308.2025.2466980. Link is article #23 at: https://bobcooper.ca/articles/artificial-intelligence-in-npd

[12] Suman Gidwani and Mayank Bhattarai, “Measuring AI Outcomes for Business Success: A 7-Step Stage Gating Framework,” IBM (July 10, 2025). Link: 7-step stage gating framework for measuring AI outcomes | IBM

[13] Robert G. Cooper, “Managing Technology Development Projects,” Research-Technology Management, 49(6) (2006): 23–31.

[14] Hans Högman and Ulf J. Johannesson, “Applying Stage-Gate Processes to Technology Development—Experience From Six Hardware-Oriented Companies,” Journal of Engineering Technology Management, 30, (2013): 264–287. https://doi.org/10.1016/j.jengtecman.2013.05.002

[15] Hans Lercher, “Big Picture - The Graz Innovation Model,” CAMPUS 02 University of Applied Sciences, Austria, (May 11, 2017, last revised May 20, 2021). Link: Big Picture - The Graz Innovation Model by Hans Lercher :: SSRN

[16] Mette P. Knudsen, Max von Zedtwitz, Abbie Griffin, and Gloria Barczak. “Best Practices in New Product Development and Innovation: Results From PDMA’s 2021 Global Survey,” Journal of Product Innovation Management, (40) 3, (Jan. 27, 2023): 57–275. DOI: 10.1111/jpim.1266

[17] Robert G. Cooper, “Making Go/Kill Decisions on AI Applications for NPD,” IEEE Engineering Management Review, Pre-print, (Jan. 1, 2025). DOI: 10.1109/EMR.2024.3519998. Link is article #17 at: https://bobcooper.ca/articles/artificial-intelligence-in-npd

ABOUT THE AUTHOR

Dr. Robert Cooper, Professor Emeritus, McMaster University, Canada; ISBM Distinguished Research Fellow at Penn State University

A world expert in the field of management of new-product development and product innovation, Dr. Cooper has written 10 books on the topic and more than 150 articles. Bob is the creator of the globally employed Stage-Gate (trademarked) process used to drive new products to market, a Fellow of the Product Development & Management Association, and an ISBM Distinguished Research Fellow at Penn State University. He is a noted consultant and advisor to Fortune 500 firms, and also gives public and in-house seminars globally.